Advances in Classifier-Free Guidance

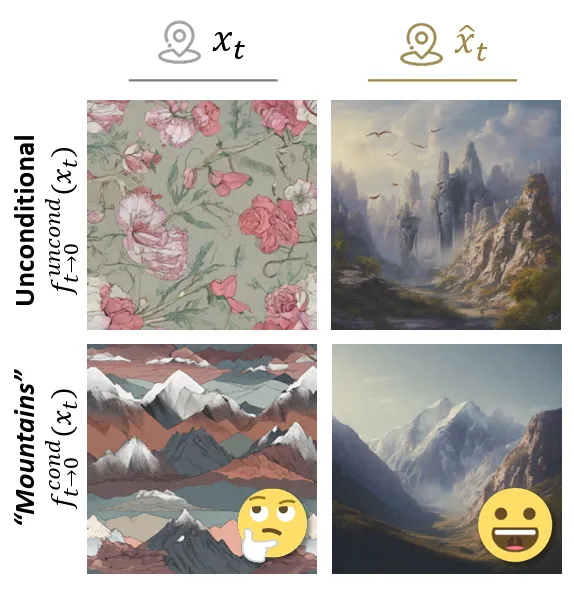

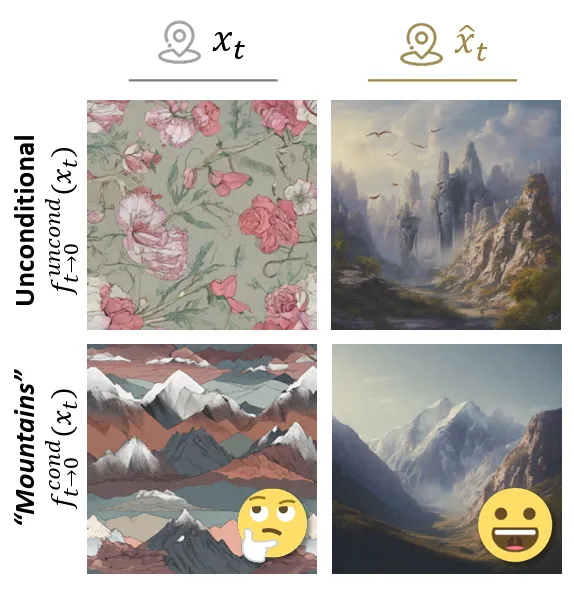

Classifier-Free Guidance (CFG) is essential for conditional diffusion models. This post covers CFG basics and two NeurIPS 2025 papers with new theoretical insights and improvements.

Having just "visited" my first virtual conference, ICLR 2020, I wanted to talk about my general impression and highlight some papers that stuck out to me from a variety of subfields.

From June to September 2019, I took a break from my ongoing PhD and worked as a Research Intern at Spotify in London.

When a neural network needs bounded outputs (like audio in [-1, 1]), applying tanh or clipping to the output during training causes vanishing gradients wherever the output saturates. Use linear outputs during training and clip only at test time.
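To make the gradient argument concrete, here is a minimal sketch in plain Python for a single scalar output. The function names (`tanh_grad`, `clip_grad`, `linear_grad`, `predict`) are made up for this illustration and are not taken from the post; a real model would rely on a framework's autograd rather than hand-written derivatives.

```python
import math


def tanh_grad(z):
    """Derivative of tanh(z) with respect to the pre-activation z."""
    return 1.0 - math.tanh(z) ** 2


def clip_grad(z, lo=-1.0, hi=1.0):
    """Derivative of clip(z, lo, hi): 1 inside the range, 0 outside it."""
    return 1.0 if lo < z < hi else 0.0


def linear_grad(z):
    """Derivative of the identity (linear) output: always 1."""
    return 1.0


def predict(z, lo=-1.0, hi=1.0):
    """Test-time output: clip the linear output into the valid range."""
    return max(lo, min(hi, z))


if __name__ == "__main__":
    # Compare how much gradient reaches the network for small and large outputs.
    for z in (0.5, 3.0, 10.0):
        print(
            f"z={z:5.1f}  tanh'={tanh_grad(z):.2e}  "
            f"clip'={clip_grad(z):.0f}  linear'={linear_grad(z):.0f}"
        )
    # At test time the linear output is simply clipped into [-1, 1].
    print(predict(3.0), predict(-0.25))
```

Once the pre-activation drifts outside the target range, the tanh and clipped variants pass almost no gradient back, so the network struggles to recover. The linear output keeps a gradient of 1 everywhere, and the bound is enforced only when predictions are produced.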

This year's ISMIR was great as ever, this time featuring lots of deep learning, new datasets, and a fantastic boat tour through Paris!